The Gramps Web AI Chat Assistant on Grampshub

The AI Chat Assistant is a new experimental feature of Gramps Web that lets you ask an AI chatbot questions about your family tree. I’ve described the technical details of its implementation and how to use it in two previous blog posts. As of today, AI chat is finally available on Grampshub.

Enabling the Gramps Web AI assistant

The steps for enabling the AI assistant for your tree on Grampshub are the same as for any other Gramps Web installation and are described in the previous blog post. As the tree owner, you need to create the semantic search index and then select the user groups allowed to use chat. If you are not the owner of your tree and would like to try out chat, please contact the tree owner.

Note that generating the semantic search index can take hours – please run it only once!

AI models used on Grampshub

Gramps Web lets you choose among different AI models and providers, so you might wonder which ones Grampshub uses and why.

For the vector embedding model (if you want to understand the technical jargon, you may want to have a look at the first blog post), Grampshub uses the Gramps Web default – the open-source distiluse-base-multilingual-cased-v2 Sentence Transformers model. It works with over 50 languages, most likely also with yours! The vector embedding model runs locally on Grampshub’s virtual servers.

For the large language model (LLM), Grampshub uses OpenAI’s gpt-4o-mini via their API. You may wonder why we’re not using an open-source model running locally for the LLM as well. The reason is that this is simply too expensive at present. Modern LLMs require powerful graphics cards (GPUs) that are very costly to operate around the clock.

If you’re wondering what that means in terms of data privacy: first of all, AI chat is turned off by default, and it’s entirely up to you whether you want to use it. Even when you use it, your entire tree is not sent to a third party. Since the vector search index is computed locally, only the context relevant to a specific chat question is sent to the LLM. The communication with the LLM is encrypted in transit. And of course the data exchange respects European and German data privacy laws (Grampshub’s legal seat is in Germany); we’ve signed a GDPR data processing addendum with OpenAI for this purpose.

Importantly, none of your genealogical data will be used to train AI models, neither by OpenAI nor by Grampshub or the Gramps Web project.
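To make the point about "only the relevant context" more concrete, here is a minimal Python sketch of the general idea behind semantic retrieval. It is not the Gramps Web implementation: the function names, the example records, and the hand-made three-dimensional vectors are all hypothetical, and a real setup would use vectors produced by the embedding model rather than toy numbers.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def select_context(question_vec, index, top_k=2):
    """Return the texts of the top_k index entries most similar to the question."""
    ranked = sorted(
        index,
        key=lambda item: cosine_similarity(question_vec, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:top_k]]


# Hypothetical local index: (text, vector) pairs with made-up toy vectors.
INDEX = [
    ("Anna Schmidt, born 1850 in Hamburg", [0.9, 0.1, 0.0]),
    ("Karl Schmidt, married 1875 in Berlin", [0.1, 0.9, 0.0]),
    ("Family farm sold in 1902", [0.0, 0.1, 0.9]),
]

# Pretend this is the embedding of the question below.
question = "When was Anna born?"
question_vec = [0.8, 0.2, 0.0]

# Only the best-matching record is placed in the prompt for the remote LLM.
context = select_context(question_vec, INDEX, top_k=1)
prompt = "Context:\n" + "\n".join(context) + "\n\nQuestion: " + question
print(prompt)
```

In this sketch the ranking happens entirely on the local side, and the prompt contains just the selected record and the question; the other two records never leave the machine.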

AI chat in Grampshub subscriptions

For now, AI chat is available to all Grampshub subscribers. You may notice that the user settings now show a new quota for AI. This is a Gramps Web feature that counts the number of chat messages sent per tree, and Gramps Web allows setting an upper limit on that number. There is no such limit on Grampshub at the moment; over the next months, we’ll monitor whether average usage stays low enough to keep it unlimited.

If it turns out a quota is necessary, this will be handled like other limits, with a fixed quota (per time period) depending on the subscription. Since Grampshub is a hosting provider for Gramps Web and not a “freemium” app, there will not be extra charges or subscriptions with limited features!
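As a rough illustration of what a per-tree message quota with an optional upper limit could look like, here is a small Python sketch. The class and method names are hypothetical and this is not how Gramps Web implements the feature; it leaves out the time-period reset entirely and only shows the counting logic, with `None` standing for "no limit", as is currently the case on Grampshub.

```python
class ChatQuota:
    """Counts chat messages per tree; limit=None means unlimited (hypothetical)."""

    def __init__(self, limit=None):
        self.limit = limit
        self.used = {}  # tree id -> number of messages counted so far

    def record_message(self, tree_id):
        """Count one message for the tree; return False if the limit is reached."""
        count = self.used.get(tree_id, 0)
        if self.limit is not None and count >= self.limit:
            return False
        self.used[tree_id] = count + 1
        return True


# With no limit, every message is accepted and simply counted.
unlimited = ChatQuota()
unlimited.record_message("my-tree")

# With a limit of 2, the third message for the same tree is refused.
limited = ChatQuota(limit=2)
results = [limited.record_message("my-tree") for _ in range(3)]
print(results)
```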

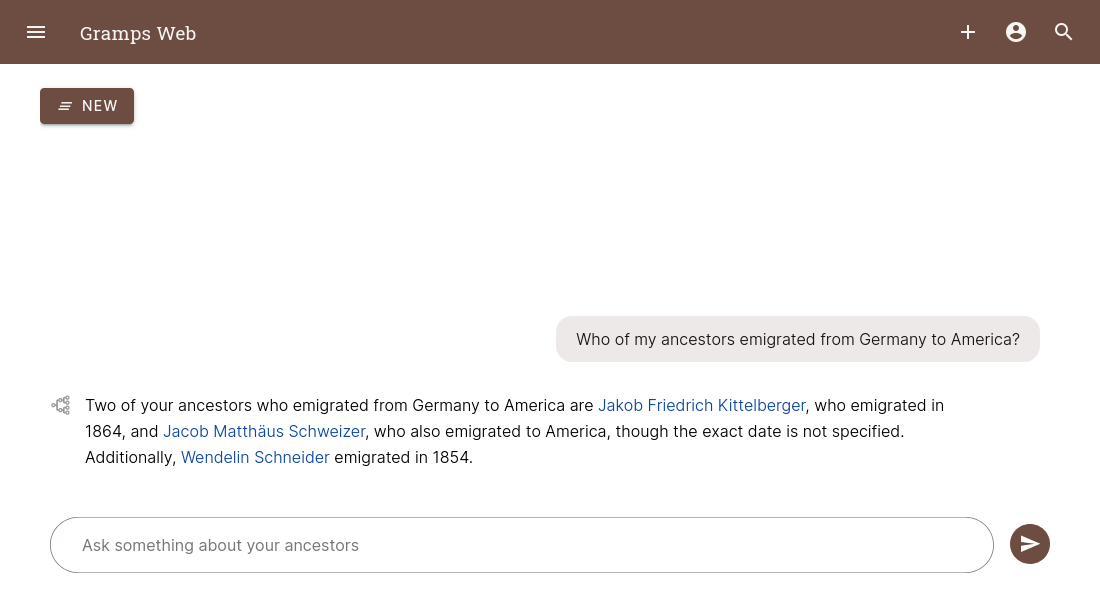

Try it out

Have fun trying out the new Gramps Web AI assistant – remember, it’s still at an early stage and not yet very powerful, so don’t expect too much, and always be skeptical about its answers. Do engage with the community to share what you learn and help the Gramps Web developers improve the assistant over time.